One of the things I really enjoyed during dashboard week was using Alteryx to download information from API’s. It is a very useful skill to learn and DS8 managed to practice it on multiple occasion during the week. This blog aims to go through a process using a few steps I carried out for most of the days during dashboard week. For demonstration purposes I will use Friday’s workflow as an example.

Step 1. What does the URL look like for the API?

An API requires a URL which you need to enter into a search engine to view/download data. I used pollution data from an API owned by Kings College London (KCL). Check it out here. The URL looks like this:

http://api.erg.kcl.ac.uk/AirQuality/Data/SiteSpecies/SiteCode={SITECODE}/SpeciesCode={SPECIESCODE}/StartDate={STARTDATE}/EndDate={ENDDATE}/Json

As you can see you only need to specify the site code (each site in London which records pollution data has a unique code), the species code (each type of pollutant has a unique species code) the start date and end date. So in essence, to obtain data for a specific site code and for a specific species code, you would have to swap the corresponding parts of the URL with the different site and species codes.

Step 2. Obtaining the data for the URL.

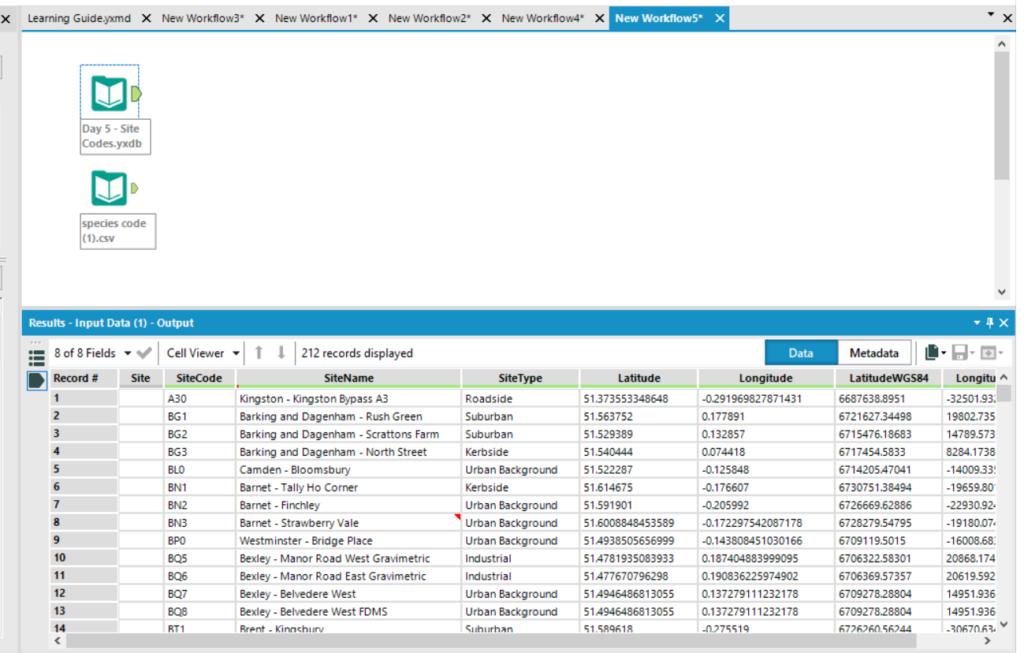

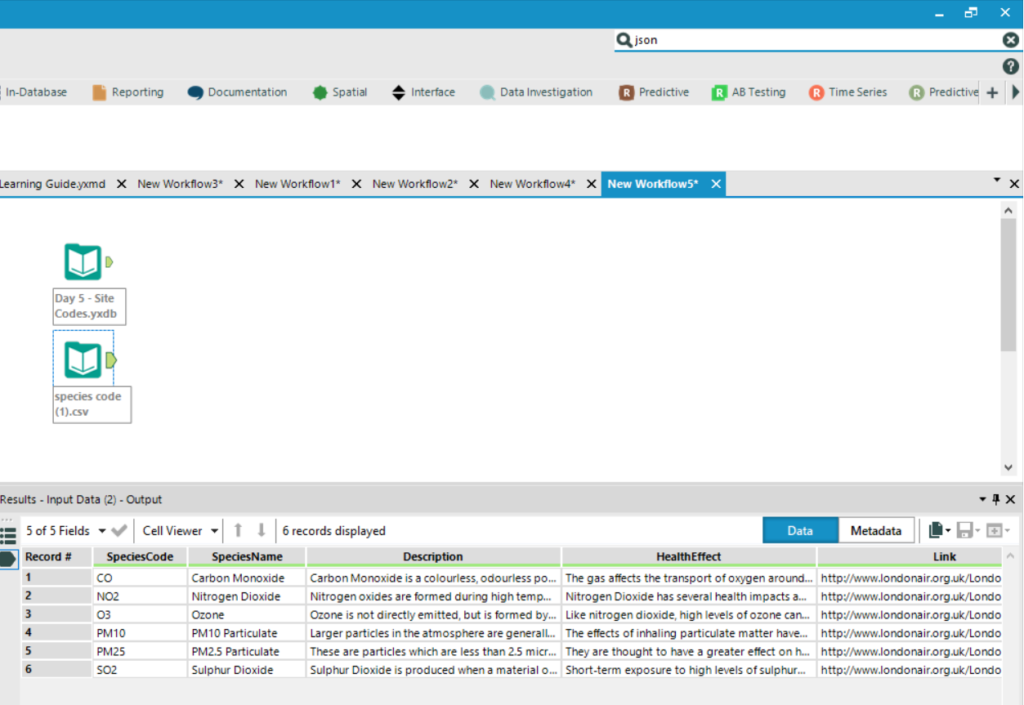

In the last step we found out that we needed to gather a list of all site and specie codes, so this is what I did:

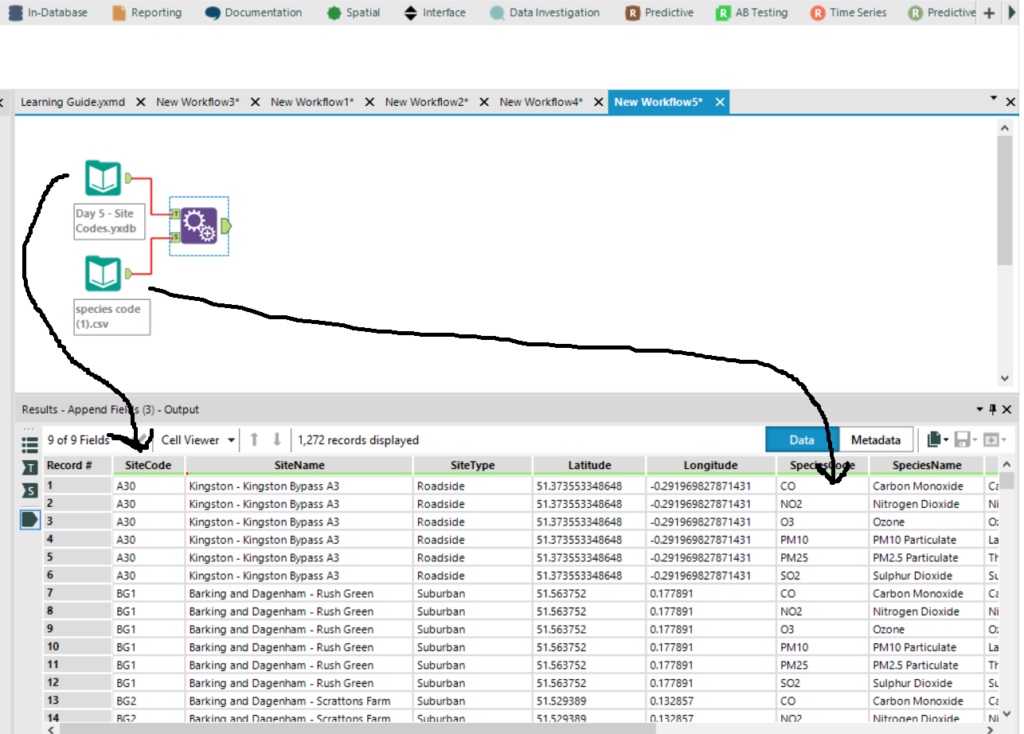

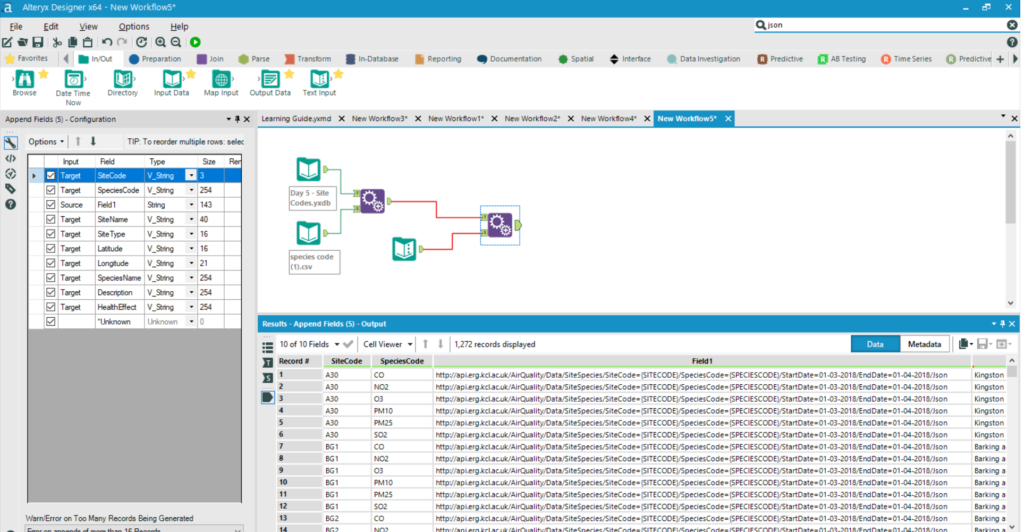

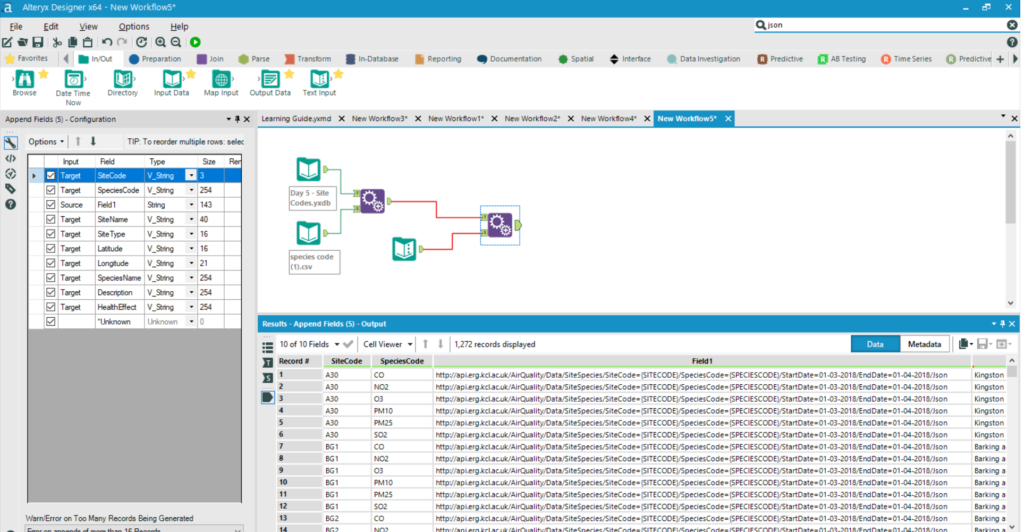

Both the images above have the species and site codes, the very information we need to swap into our URL. As each URL requires a species code and a site code we need to join the data in such a way that we have the species code and site code on the same line. The tool which does this is the Append Fields and it has this effect:

So we are nearly there, as we have the site and species code on the same line.

Step 3. Building up the URL

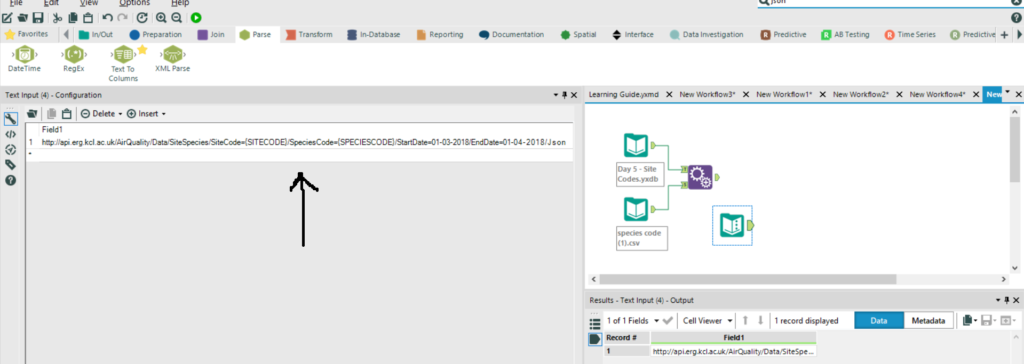

The next step is to insert the URL into a tool which can be joined later on (in this case I only wanted to obtain pollution data for one month, hence the start and end date will remain constant in the URL). The text input tool below does this for us as you can see below:

Joining this up gives us the URL, species and site code on one row.

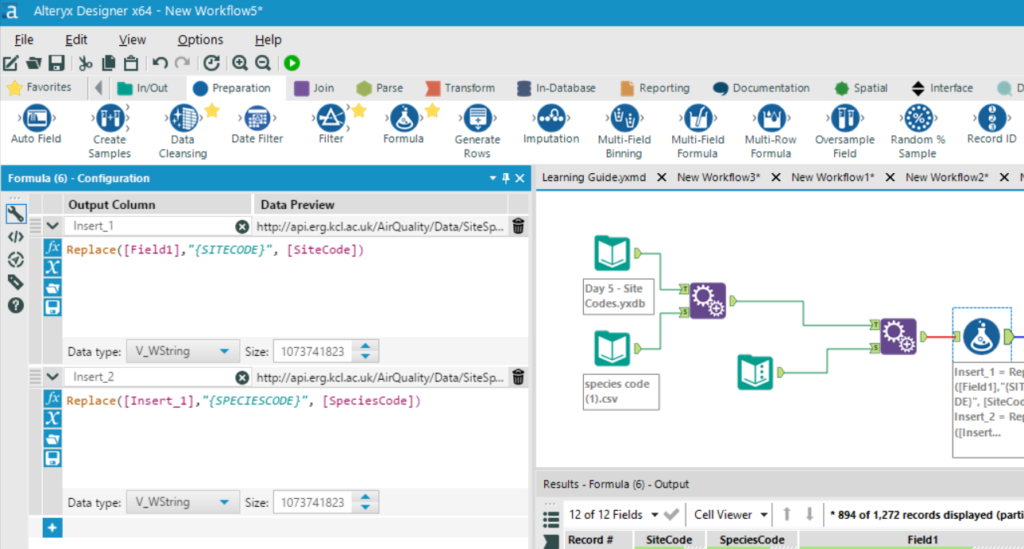

The next sub step is to insert the codes into the URL by replacing parts of the URL. This can be done using the formula tool which literally replaces parts of the URL:

Which results in list of all the URL’s we need to pass to the API (under the Field 1 header):

At this point the data can now be downloaded using a download tool.

Summary

In summary there are 3 steps which could help break down the process of downloading data from an API:

Step 1. What does the URL look like for the API?

Step 2. Obtaining the data for the URL.

Step 3. Building up the URL.

I hope this will help users who are new to Alteryx using API’s. Feel free to get into contact with me via twitter and Linkedin if you have anything to say.