Previously, I wrote a blog post on how to translate the data values in a data source into multiple languages using a Python script in Tableau Desktop. If you haven't read it, you can find that blog here.

Continuing from that blog, what about translating all the field names in the data source into other languages?

Can I use a parameter in Tableau Desktop to switch the language dynamically?

Are there any issues with doing that?

To answer those questions, this blog shows how to translate the field names of a data source in Tableau Desktop by going through:

1/ Set up the environment

2/ Write a Python script to translate the field name

3/ Set up Tableau Desktop

4/ Apply the Python script to translate the field name

5/ Summary and discussion

I learned some of the techniques used here from the DataDevQuest challenge, specifically the intermediate challenge DDQ-2025-06 created by Paula Muñoz.

1/ Set up the environment

To run a Python script in Tableau Desktop, you need to install TabPy and start it later. If you haven't installed it yet, install it with this command:

pip install tabpy

To translate text into multiple languages, I used the deep-translator package (you can read its documentation here). Install it with this command:

pip install deep-translator

Later, in Tableau Desktop, I need to pass a parameter into the Python script in the Table Extension through Custom SQL. To do that, I use duckdb, a lightweight SQL database, to create a small database for the parameter. To install duckdb, run:

pip install duckdb

We also need pandas to work with data frames. If you haven't installed it, install it with this command:

pip install pandas

The sample data source I used in this blog is Superstore. If you have installed those 4 packages and the Superstore dataset is ready, let's get started!
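If you want to confirm that all 4 packages are importable from the same Python environment that TabPy will run in, a quick check like the one below can help. This is a small sketch of my own, not part of the original setup; note that the deep-translator package is imported under the module name deep_translator.

```python
import importlib.util

# pip (distribution) name -> module name used in `import`
REQUIRED = {
    "tabpy": "tabpy",
    "deep-translator": "deep_translator",
    "duckdb": "duckdb",
    "pandas": "pandas",
}


def find_missing_packages(required):
    """Return the pip names whose top-level module cannot be found."""
    missing = []
    for pip_name, module_name in required.items():
        # find_spec returns None for a top-level module that is not installed
        if importlib.util.find_spec(module_name) is None:
            missing.append(pip_name)
    return missing


if __name__ == "__main__":
    missing = find_missing_packages(REQUIRED)
    if missing:
        print("Install these first: pip install " + " ".join(missing))
    else:
        print("All packages are installed.")
```

find_spec only locates the module without importing it, so the check is fast and has no side effects.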

2/ Write a Python script to translate the field name

I used a Jupyter Notebook in Visual Studio Code, but you can use any Python IDE to test the script. The script is quite similar to the one I shared in the previous blog; the difference is that now we translate the column names, not the data values.

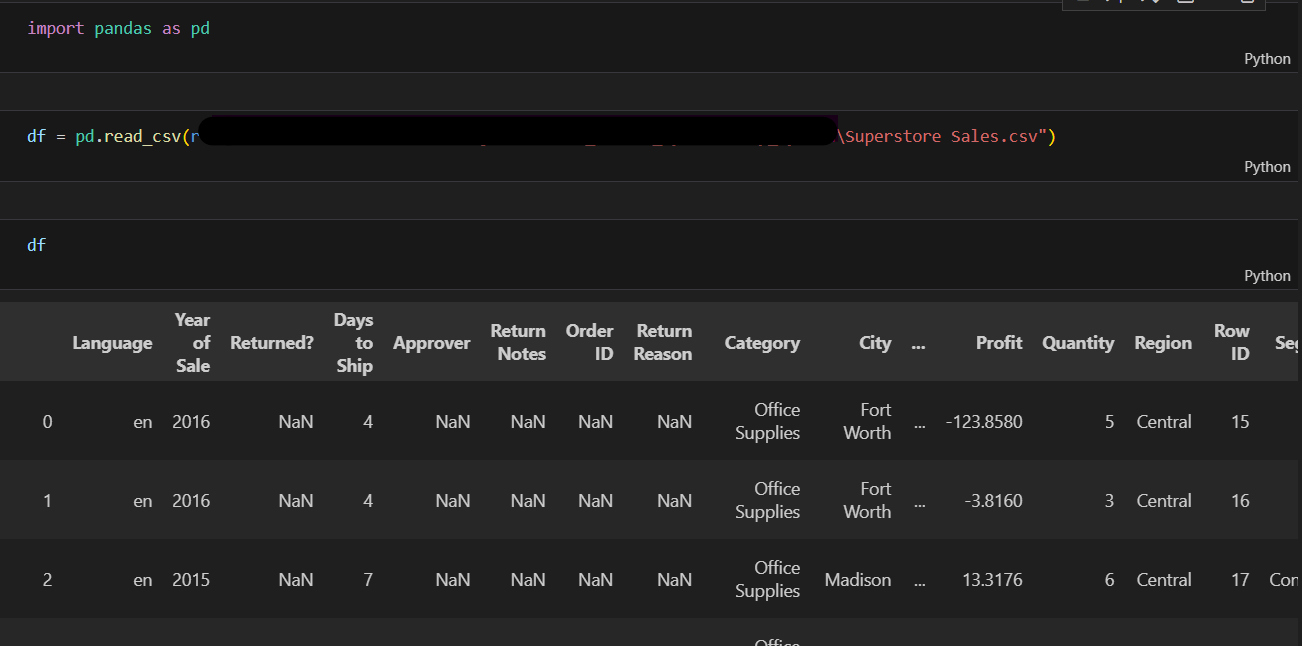

In Fig. 1, I read the CSV file with pandas and created a new column "Language" with the value "en" (English).
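Fig. 1 corresponds roughly to the pandas code below. To keep the sketch self-contained, it reads a tiny inline CSV with made-up rows and a few Superstore-style column names instead of the real file; the file path in the comment is a placeholder.

```python
import io

import pandas as pd

# Made-up rows so the sketch runs anywhere.
# With the real data you would call pd.read_csv("<your path>/Superstore.csv") instead.
SAMPLE_CSV = """Row ID,Order ID,Category,Sales
1,ORDER-001,Furniture,100.0
2,ORDER-002,Technology,250.5
"""

df = pd.read_csv(io.StringIO(SAMPLE_CSV))

# Add a Language column; every row starts with "en" (English).
df["Language"] = "en"
print(df)
```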

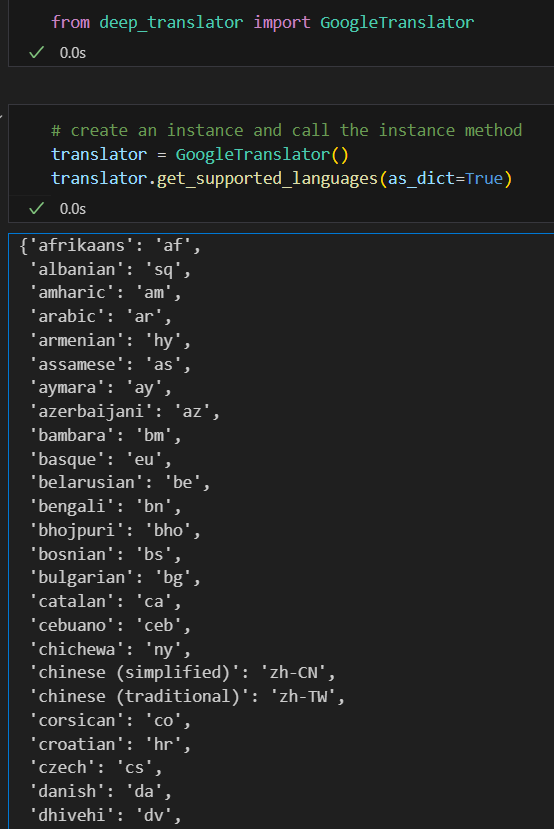

Next, I check which language codes are supported for translation. I import the GoogleTranslator class from the deep_translator package that we installed in the first part.
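One way to list the supported codes, and to check a code before translating (useful later, when the code arrives from a Tableau parameter), is sketched below. get_supported_languages(as_dict=True) returns a mapping of language names to codes; the helper names supported_language_codes and is_supported are my own.

```python
def supported_language_codes():
    """Return deep-translator's {language name: code} mapping for GoogleTranslator."""
    # Imported inside the function so is_supported() can be used without the package.
    from deep_translator import GoogleTranslator

    return GoogleTranslator(source="auto", target="en").get_supported_languages(as_dict=True)


def is_supported(code, supported):
    """True if `code` appears among the codes (the values) of `supported`."""
    return code in supported.values()
```

For example, is_supported("fr", supported_language_codes()) should return True once deep-translator is installed.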

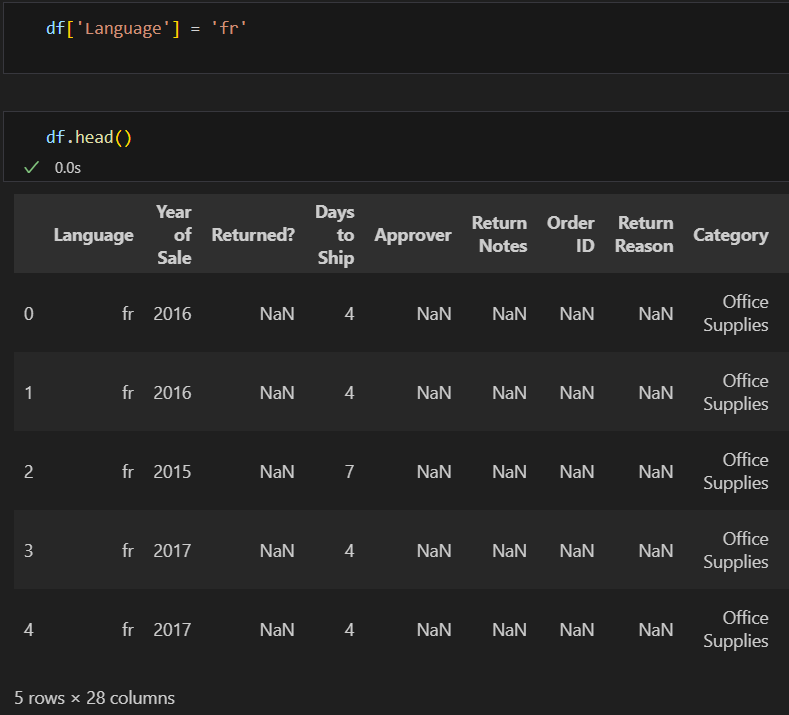

Now, I'd like to test with French, so I assign the value "fr" to df['Language'].

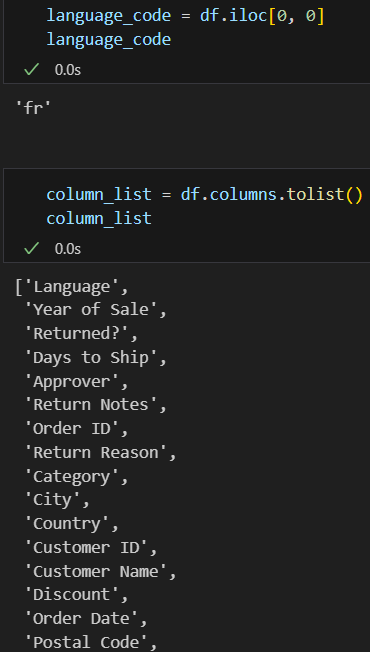

Next, I get the "fr" value from df as the language code, along with the list of column names to translate, and store them in the variables language_code and column_list.
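The exact code in the figure may differ, but one straightforward way to get both values is shown below. It assumes column_list simply holds every column name, and the data frame is a made-up stand-in for the Superstore one.

```python
import pandas as pd

# Made-up stand-in for the Superstore data frame from the previous step.
df = pd.DataFrame({"Order ID": ["ORDER-001"], "Sales": [100.0], "Language": ["en"]})

# Switch the test language to French.
df["Language"] = "fr"

# All rows hold the same code, so reading the first one is enough.
language_code = df["Language"].iloc[0]

# The column names to translate, as a plain Python list.
column_list = df.columns.tolist()
print(language_code, column_list)
```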

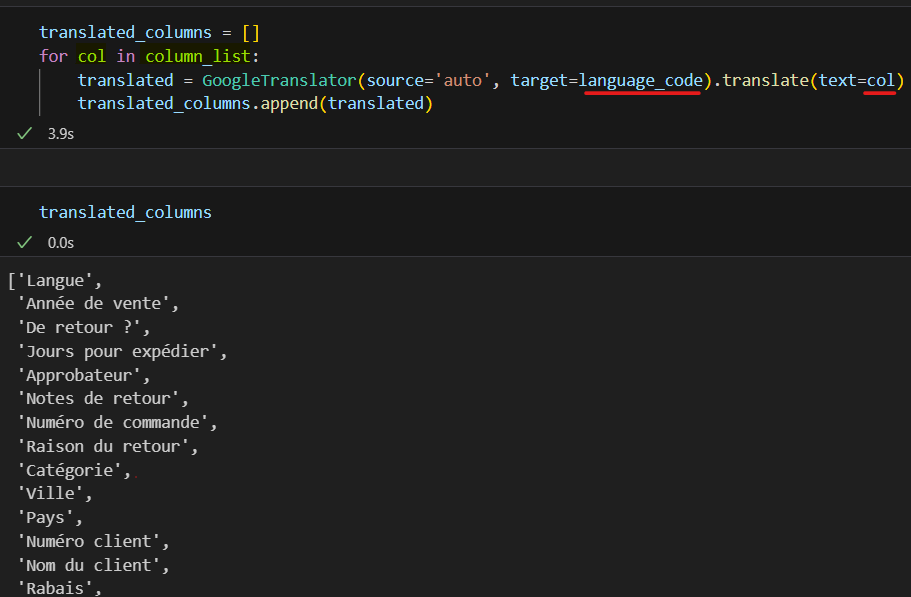

In the next step, I initialize an empty list (translated_columns) to store the translated column names and then iterate over each value in column_list. For each one, I create a GoogleTranslator with 2 arguments, source and target. The source is set to "auto" so the source language is detected automatically, and the target is the language code we want to translate to; here, it is the language_code variable from above.

Then, I call the translate method with the text to translate, which here is the current value from column_list (col), and append the result to the translated_columns list.

In Fig. 5, printing the translated_columns list shows that the column names have been translated.
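The loop can be sketched as follows. Unlike the description above, this sketch creates the GoogleTranslator once and reuses it for every column, since source and target do not change inside the loop. The helper names make_google_translate and translate_columns are my own, and passing the translate function in lets you try the loop with a plain dictionary instead of calling Google.

```python
def make_google_translate(language_code):
    """Return a text -> translated text function backed by deep-translator (needs internet)."""
    from deep_translator import GoogleTranslator

    # source="auto" lets Google detect the source language.
    translator = GoogleTranslator(source="auto", target=language_code)
    return translator.translate


def translate_columns(column_list, translate):
    """Translate every name in column_list and return the results in the same order."""
    translated_columns = []
    for col in column_list:
        translated_columns.append(translate(col))
    return translated_columns
```

In the notebook, the call would be translated_columns = translate_columns(column_list, make_google_translate(language_code)).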

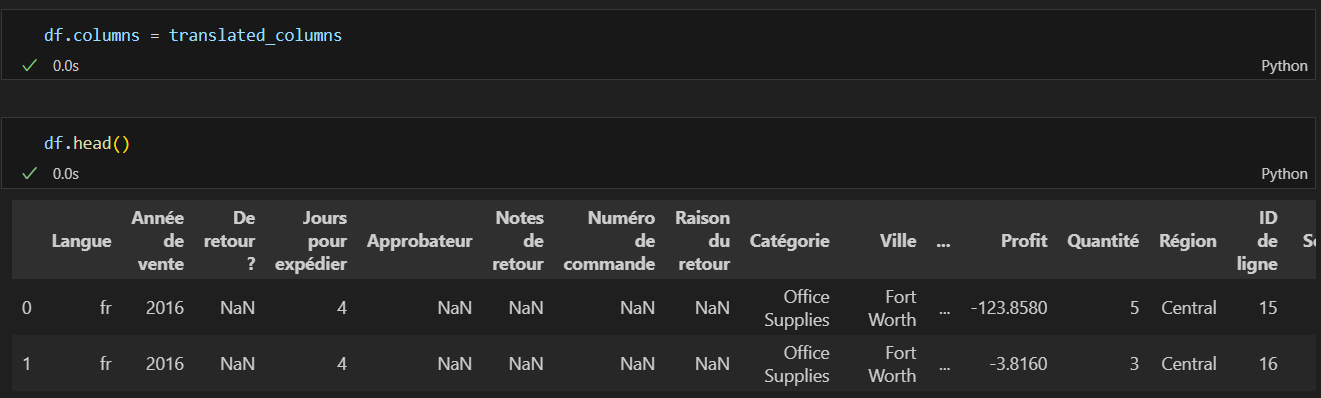

In the last step, I assign the translated_columns list back to the columns of the data frame df (Fig. 6).
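Assigning the list to df.columns works as long as there is exactly one translated name per column. The helper below (a hypothetical name, apply_translated_columns) adds two checks of my own: the lengths must match, and the translated names must be unique, because two source names can translate to the same word and pandas lookups by a duplicated name become ambiguous.

```python
import pandas as pd


def apply_translated_columns(df, translated_columns):
    """Return a copy of df whose column names are replaced by translated_columns."""
    if len(translated_columns) != len(df.columns):
        raise ValueError("Need exactly one translated name per column")
    if len(set(translated_columns)) != len(translated_columns):
        raise ValueError("Translated column names are not unique")
    out = df.copy()
    out.columns = translated_columns
    return out
```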

Now we know how the Python script translates the column names. The Language column will carry our parameter for switching languages, because its value sets the target argument of GoogleTranslator.

Let's move to Tableau Desktop to set it up.

3/ Set up Tableau Desktop

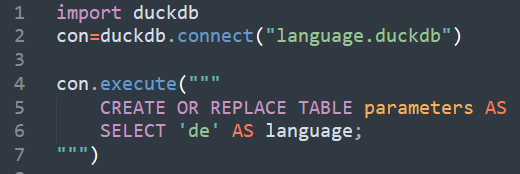

Before opening Tableau Desktop, I set up duckdb first; it handles the parameter used to switch languages. I learned this technique from the DataDevQuest challenge and am applying it here. Thank you, Paula :)

I created a new Python file called duckdb_input.py. This file connects to duckdb and creates a table for the parameter, initialized with the language value "de". Save the file, then run it in the terminal:

python duckdb_input.py

In the same folder, you will see a new database file called "language.duckdb".

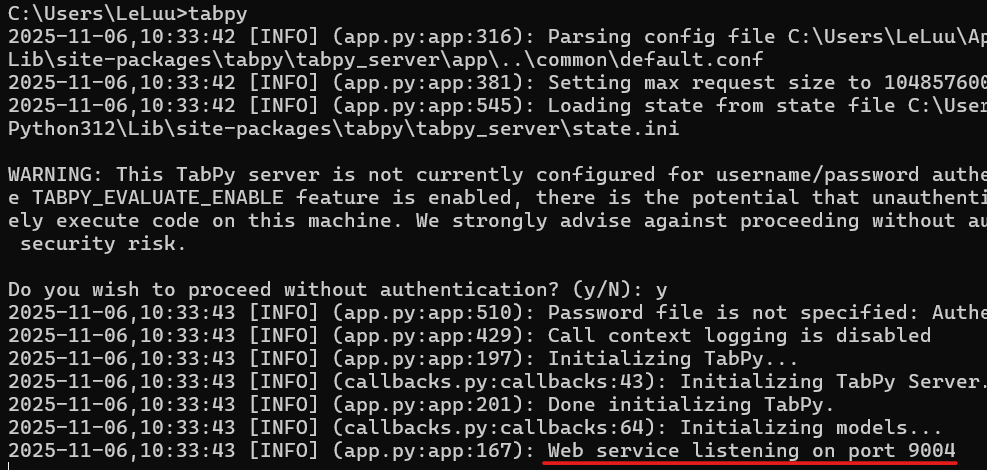

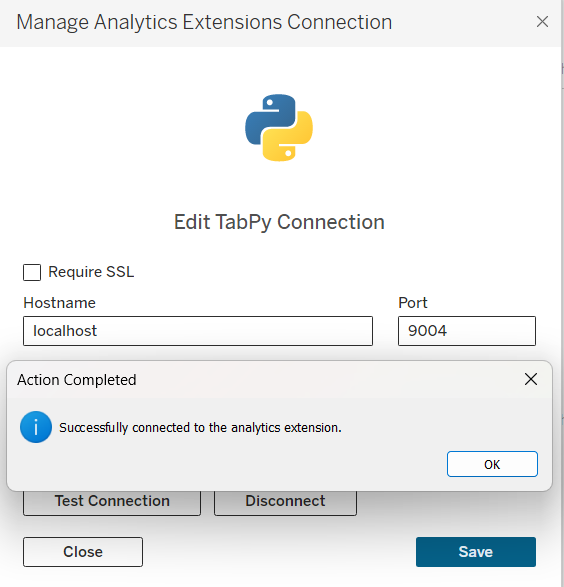

Now, start TabPy by typing tabpy in the terminal. Make sure the service is listening on its port (by default, port 9004 on localhost).
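To double-check from Python that something is listening on the TabPy port, a plain TCP check is enough. This is a small helper of my own; it only confirms that the port accepts connections, not that the process behind it is TabPy.

```python
import socket


def is_port_open(host="localhost", port=9004, timeout=2.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    status = "listening" if is_port_open() else "not reachable"
    print(f"localhost:9004 is {status}")
```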

Now, open Tableau Desktop and connect to the sample Superstore data source. Then, go to Help > Settings and Performance > Manage Analytics Extensions Connection and fill in the Hostname and Port. If TabPy is running on your local computer, the Hostname is localhost and the Port is 9004.

I will create 2 new parameters:

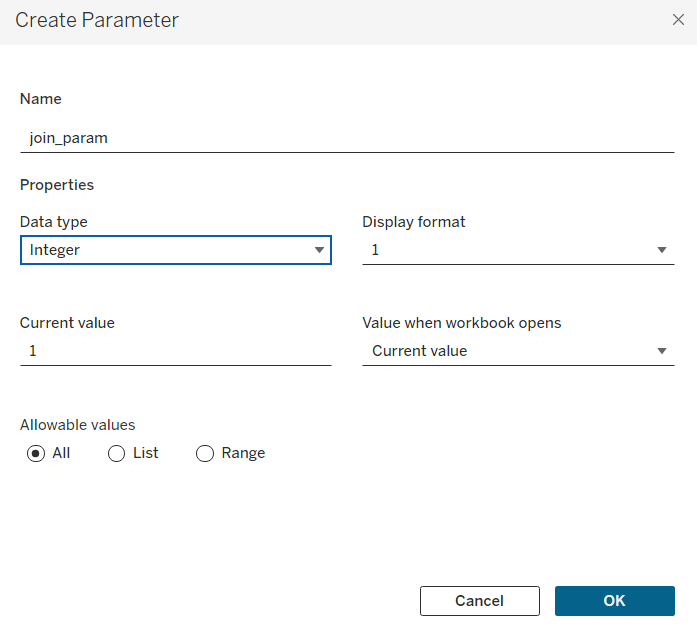

1/ join_param Parameter

Create an integer parameter called join_param with a default value of 1.

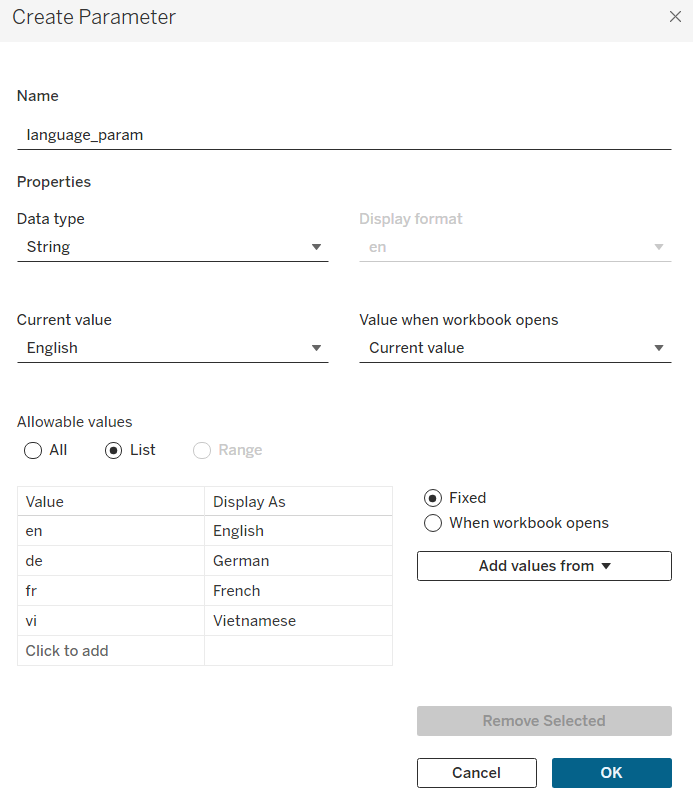

2/ language_param Parameter

Next, create a string parameter called language_param. It holds the list of language codes that will be passed into the Python script later.

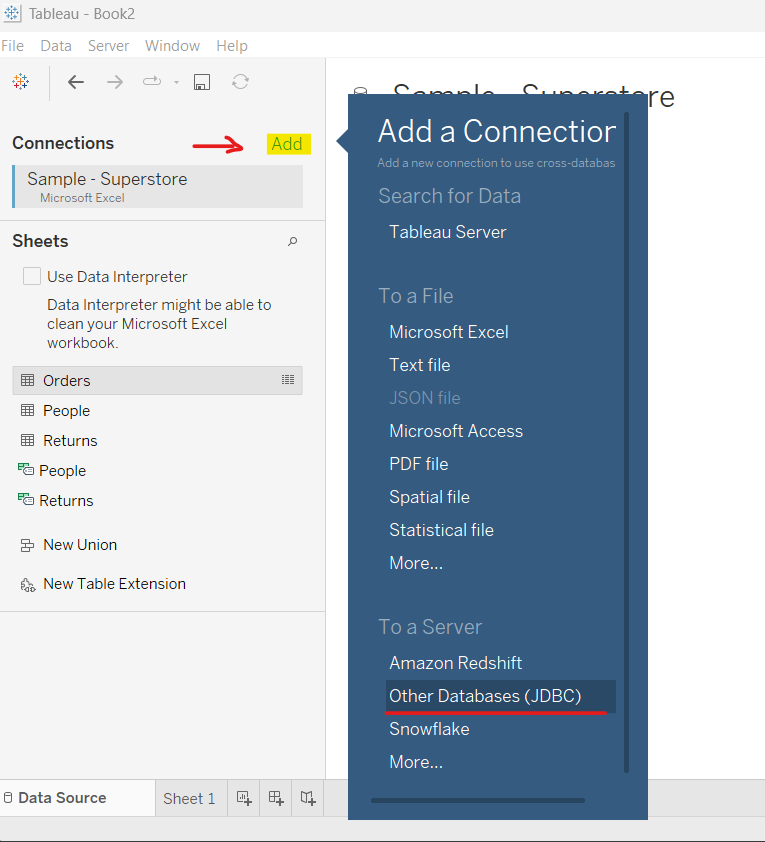

Now, go back to the Data Source page, click the Add button to add another connection, and choose Other Databases (JDBC). This lets us connect to the duckdb database created by the Python script above.

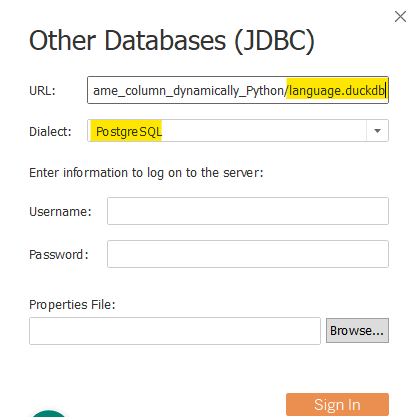

After you click Other Databases (JDBC), a dialog window appears (Fig. 13).

In the URL box, enter a URL with this syntax:

jdbc:duckdb:/Users/<Your User Name>/.... /language.duckdb

Make sure you enter the correct path to the language.duckdb file, using forward slashes ("/") as separators. In Dialect, choose PostgreSQL, then click Sign In.
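Because the path has to use forward slashes, it can be less error-prone to let Python build the URL. This helper (a hypothetical name, duckdb_jdbc_url) turns a relative or ~ path into an absolute one and converts any Windows backslashes to "/"; run it from the folder that contains language.duckdb.

```python
import os
from pathlib import Path


def duckdb_jdbc_url(db_path):
    """Build a DuckDB JDBC URL from a file path, always using forward slashes."""
    absolute = os.path.abspath(os.path.expanduser(db_path))
    # as_posix() turns Windows backslashes into "/" (no change on macOS/Linux)
    return "jdbc:duckdb:" + Path(absolute).as_posix()


if __name__ == "__main__":
    print(duckdb_jdbc_url("language.duckdb"))
```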

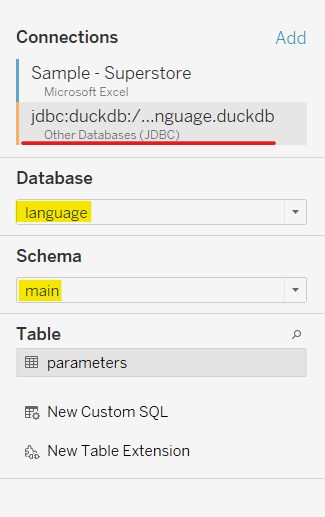

Once the duckdb connection has been added successfully, select the Database language, which is the database created by duckdb_input.py. The Schema is main.

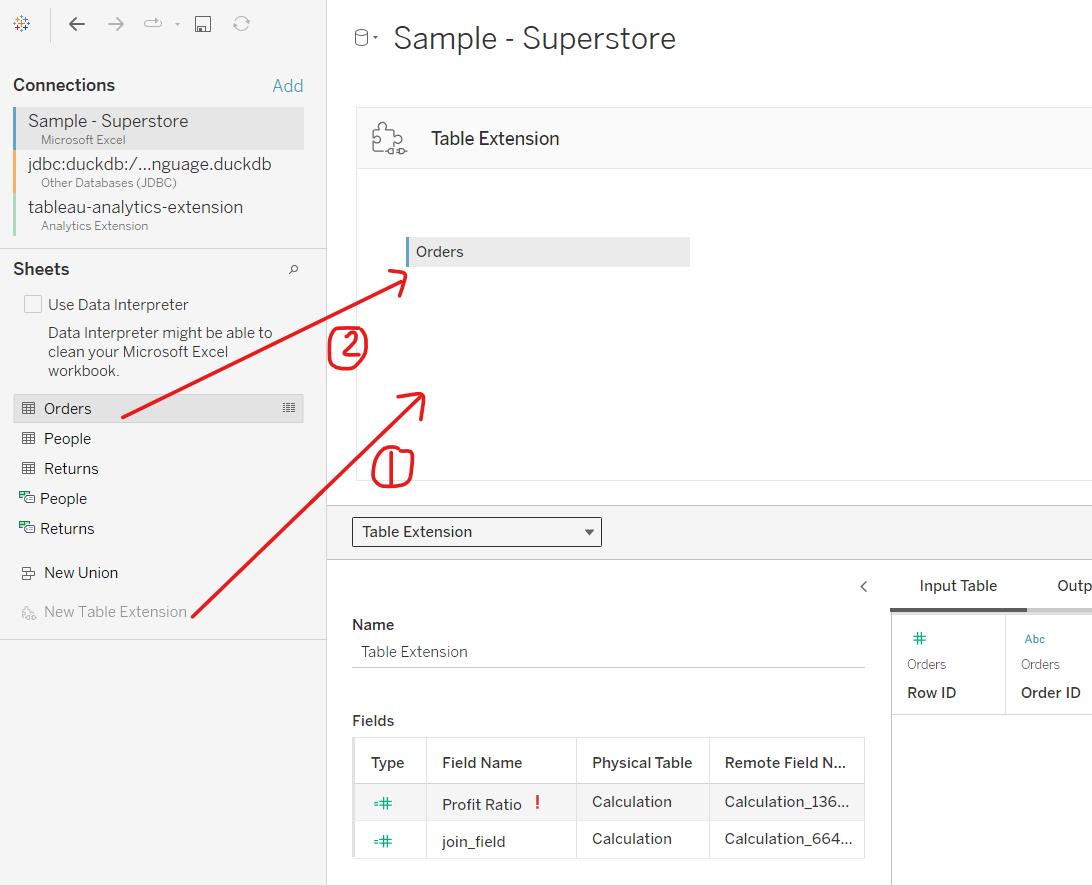

Next, drag New Table Extension onto the blank canvas on the right.

The Table Extension window looks like Fig. 15. Then, drag the Orders table into it.

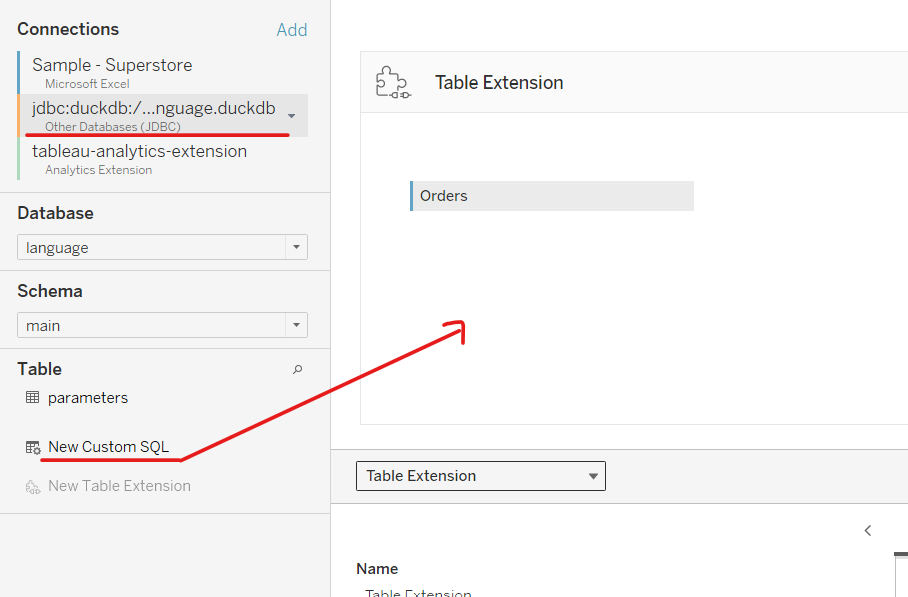

Now, switch to the duckdb connection.

Then, drag New Custom SQL from the Table area onto the canvas (in the same place as the Orders table). This Custom SQL table will be joined with Orders and adds the language code and the join field (the integer 1) to the data frame.

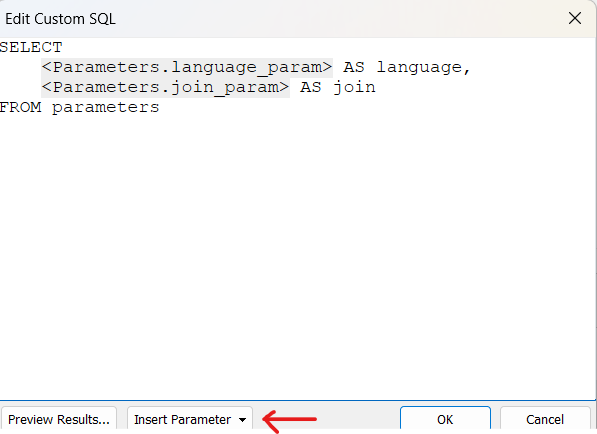

You can insert the parameters from the drop-down menu at the bottom. This table has 2 fields, language and join_field. Then, click OK. Tableau shows an error message at this point, but that's OK, because we will set up the join next.

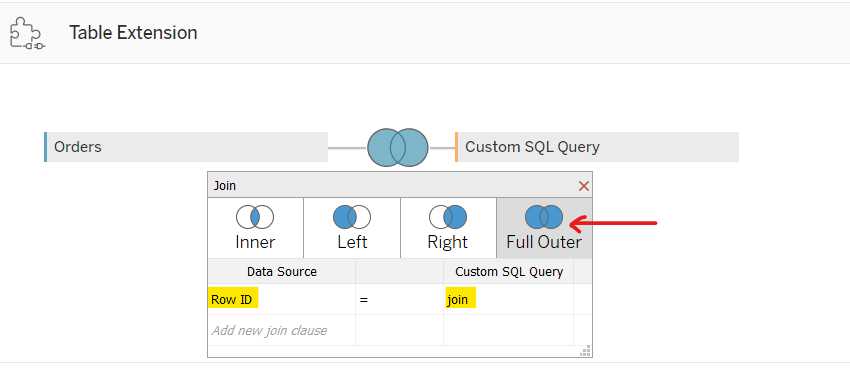

Click the join symbol and choose Full Outer; the goal is to combine the 2 tables like a cross join. For the join clause, I chose Row ID on the Data Source side and join on the Custom SQL Query side.

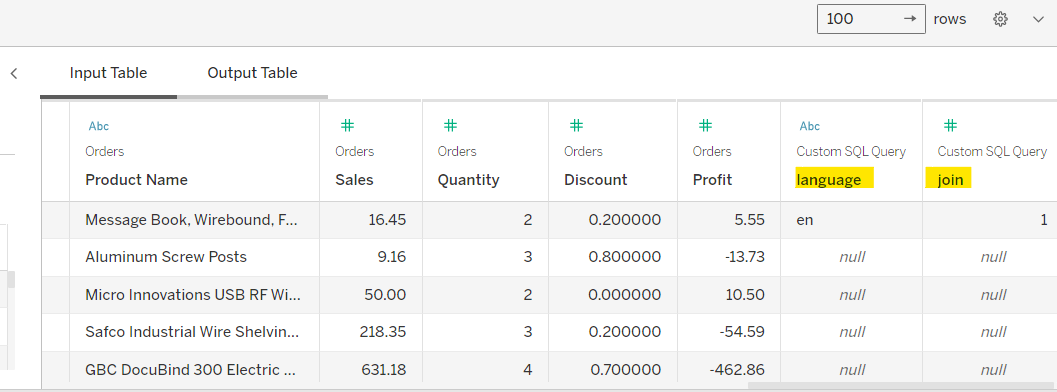

At the bottom, you can now see the 2 tables combined.
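To picture the intended result, every order row carrying the language code and join field, here is a pandas analogy. It uses merge(how="cross"), a true cross join, rather than the Full Outer join configured in Tableau, so treat it as an illustration rather than a replica of the Tableau setup. The column names language and join_field follow the Custom SQL fields; the order values are made up.

```python
import pandas as pd

# Made-up order rows.
orders = pd.DataFrame({"Row ID": [1, 2, 3], "Sales": [10.0, 20.0, 30.0]})

# The one-row table the Custom SQL builds from the two parameters.
params = pd.DataFrame({"language": ["fr"], "join_field": [1]})

# A cross join pairs every order row with the single parameter row.
combined = orders.merge(params, how="cross")
print(combined)
```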

Everything is set up now, so let's apply the Python script.

4/ Apply the Python script to translate the field name

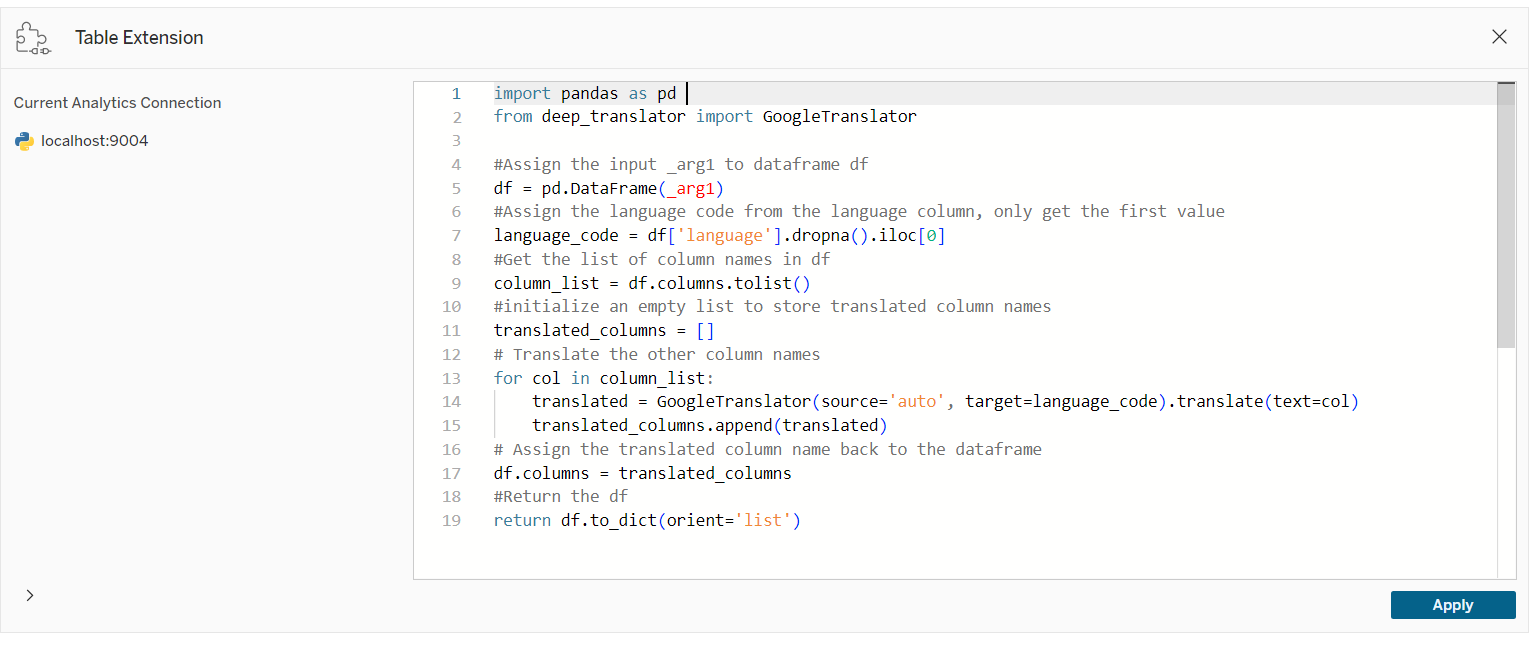

I enter the Python script in the script box at the top.

In the Python script, the input is _arg1, the input table shown in Fig. 19. I store it in the pandas data frame df and then paste in the code I wrote in Visual Studio Code. At the end, the script returns the data frame as a dictionary (df.to_dict()). Finally, click Apply.
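Putting the pieces together, the script body could look roughly like the function below. Tableau passes the input table to a Table Extension script as _arg1 and expects a dictionary back; I wrap the body in a function with a hypothetical name (translate_field_names) and an optional translate argument so it can be tried outside Tableau. I assume the language code arrives in a column named language (as in the Custom SQL) and return a dictionary that maps each column name to a list of values (orient="list").

```python
import pandas as pd


def translate_field_names(_arg1, translate=None):
    """Sketch of the Table Extension script: translate every column name.

    _arg1: the input table as {column name: list of values}.
    translate(text, target): optional stand-in for GoogleTranslator, for testing.
    """
    df = pd.DataFrame(_arg1)

    # Assumption: the Custom SQL adds the language code in a column named "language".
    language_code = df["language"].iloc[0]

    if translate is None:
        from deep_translator import GoogleTranslator

        translator = GoogleTranslator(source="auto", target=language_code)

        def google_translate(text, target):
            return translator.translate(text)

        translate = google_translate

    df.columns = [translate(col, language_code) for col in df.columns]
    # Column name -> list of values.
    return df.to_dict(orient="list")
```

In the Tableau script box, you would paste only the body, starting from df = pd.DataFrame(_arg1) and ending with the return line.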

Now, you can test it in Sheet1 by changing the value of language_param.

5/ Summary and discussion

I hope this blog helps if you are looking for a way to translate field names and switch languages dynamically. After testing this approach, I summarized its pros and cons.

Pros:

- Switch the language of the field names dynamically with a parameter

- No need to translate each field name by hand or join multiple tables for different languages

- There are far fewer column names than data rows, so switching languages takes only a few seconds

- No calculated field is needed to switch the language

- Easy to add or remove columns and calculations in the Python script

Cons:

- The Python script replaces the old fields with new ones. After you add fields to Rows/Columns, switching to another language breaks the view, and you need to use "Replace References" for each field in the view

- The setup takes many steps

- It only works with data sources from local files or data pulled from APIs, not with data sources on Tableau Cloud/Server

- The original hierarchies are lost. For example, the Product hierarchy contains 2 fields, Product ID and Product Name; after translation, neither field is in the Product hierarchy anymore

- The Python script runs on the data source inside the Table Extension, so any new calculated field you add in Tableau is not translated when you switch the language parameter

However, if you work with a team spread across multiple countries, translating the field names can help everyone build visualizations and calculations.

What do you think about this topic? Feel free to share your thoughts! See you in the next blog!