On Thursday of week 1, Tableau Zen Master Peter Gilks came to visit us to teach us about data exploration.

Pretty much every project I had approached previously came with a predefined goal. There were clear questions that needed to be answered, and those shaped the way I treated the data. As a social researcher you have a hypothesis and look for specific evidence to either support or reject it. How this will be done should be clear when the research is designed. The first time I truly explored data I was not familiar with was for stage two of the application process for the Data School.

On that occasion I spent the first two days of the project investigating the data set and looking for patterns. Peter gave us 15 minutes, at the end of which we were asked to present our findings.

I made a mess of it.

I started on track and fairly quickly found an irregular data point that Peter had deliberately planted. That was more down to luck than to a systematic search, but I was nonetheless proud of myself. From there it went downhill. I got distracted and started looking at other aspects of the data set rather than staying on the same path, and I created multiple workbooks that said absolutely nothing. More importantly, I didn’t even exclude the erroneous data point, which skewed the entire data set and made my subsequent conclusions meaningless.

When it came to presenting my findings, Peter did a fantastic job of taking the shambles that I had created and using them to make a complete picture. He explored with us how he would have built on my first attempts, picking out some of the things that I had done well and explaining how he uses these approaches systematically to find stories in the data he is given. To a large extent this involves looking at overall trends, then focussing on the examples that stand out, drilling down into them and using other variables to explain why they might be outliers.

As something of a perfectionist, and a rather competitive person, I don’t like failing a task. However, I don’t think I have ever been in an environment where I was as comfortable messing up, and it is impressive that the Data School managed to create this learning atmosphere after only a few days. I also learned a lot from this failed task, thanks to the way Peter talked through the results with us and had me follow along with his suggestions in my own workbook. The next time I approach a new data set I will have a much better idea of the steps I should take to make sense of it.

Another lesson that I take away is to be sceptical of the quality of a dataset and to always perform checks at the start. We had all naively assumed that the numbers Peter had given us were accurate. None of us noticed that he had in fact meddled with the dataset by introducing duplicate data, which none of us had checked for and which made all our conclusions void.

So, turns out Cindy didn’t actually get that 140% discount on her printer order…

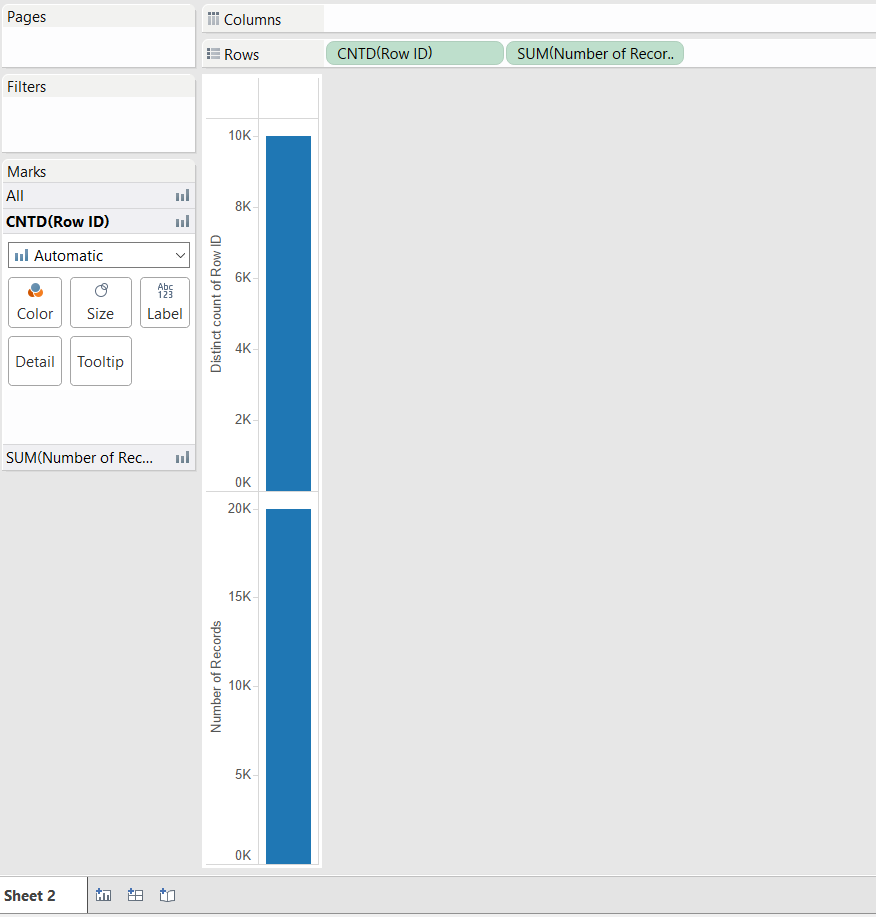

To check for duplicates, you can simply pull in your “Number of records” alongside the distinct count of the variable at the most detailed level of your data, the one that should have a unique value on every row. In this case that was the “Row ID”. The two numbers did not match, and a closer look at the underlying data then revealed that it contained duplicate entries.

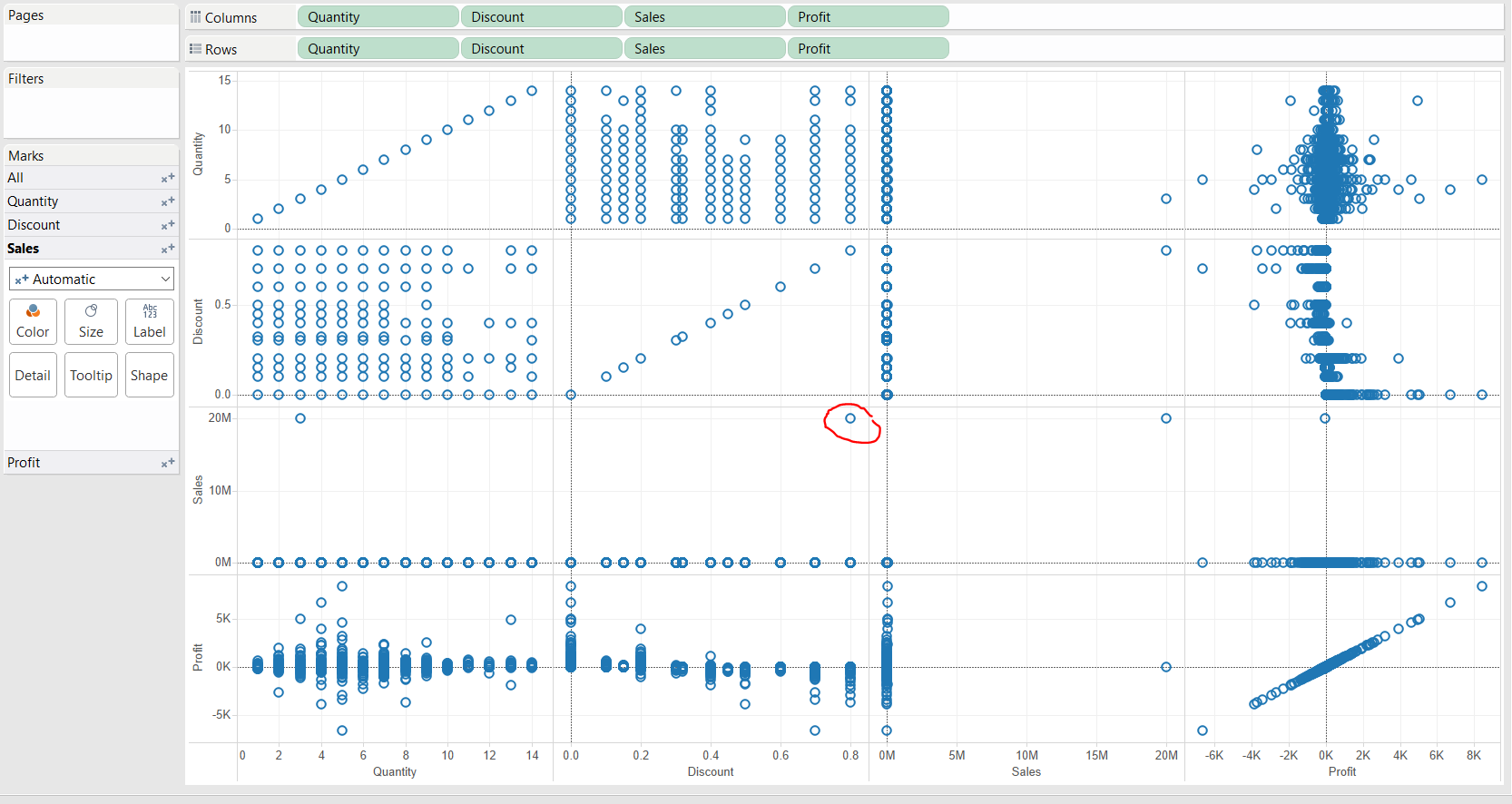
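
Outside Tableau, the same comparison can be sketched in a few lines of Python. This is only an illustration of the idea: the rows below are made up (the real data set is not reproduced here), and the `duplicate_report` helper is a hypothetical name of my own.

```python
from collections import Counter

# Hypothetical sample rows, invented for illustration only.
rows = [
    {"Row ID": 1, "Customer": "Cindy", "Discount": 0.7},
    {"Row ID": 2, "Customer": "Alex", "Discount": 0.1},
    {"Row ID": 1, "Customer": "Cindy", "Discount": 0.7},  # duplicate entry
]


def duplicate_report(rows, key="Row ID"):
    """Return (number of records, distinct count of key, keys seen more than once)."""
    counts = Counter(row[key] for row in rows)
    duplicated = {value: n for value, n in counts.items() if n > 1}
    return len(rows), len(counts), duplicated


n_records, n_distinct, duplicated = duplicate_report(rows)
if n_records != n_distinct:
    print(f"{n_records} records but only {n_distinct} distinct Row IDs")
    print("Row IDs appearing more than once:", duplicated)
```

If the two counts are equal, every row has its own ID and there is nothing to clean up; if they differ, the dictionary of repeated IDs tells you where to look in the underlying data.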

Peter’s second piece of advice was to check for outliers with scatter plots, including all measures of the dataset in a single sheet. In the picture below I have marked the outlier in one of the views. With these scatter plots it stands out immediately, and once this erroneous data point is excluded the distributions look normal again and provide a great overview of the overall patterns in the data.
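
The scatter plots are a visual check. As a rough numeric stand-in (not what Peter showed us), one could flag values that sit far from the median of a measure. The sketch below uses a modified z-score based on the median absolute deviation; the `flag_outliers` helper, the 3.5 threshold and the sample sales figures are all assumptions for illustration.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return the indexes of values whose modified z-score exceeds the threshold."""
    med = median(values)
    # Median absolute deviation: the typical distance of a value from the median.
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # all values (nearly) identical, nothing to flag
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical sales figures with one planted error at the end.
sales = [120.0, 95.5, 130.2, 110.0, 99.9, 25000.0]
suspicious = flag_outliers(sales)
print("Indexes worth a closer look:", suspicious)
```

A check like this only points at candidates. Whether a flagged value is an error or a genuine extreme still needs a look at the underlying data, which is exactly what the scatter plots make easy.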

Check out Peter’s blog at http://paintbynumbersblog.blogspot.co.uk/