Today's challenge was mostly about data prep. Cheese.com sounds like a website that got its domain in 1999 and hasn't been updated since 2006, but lo and behold, the website we were tasked with scraping is a very current and detailed guide to all things cheesy.

The list of cheeses was split up alphabetically, with some letters having multiple pages of cheeses.

To get round this, I used a batch macro that swapped the letter and page number into the URL on each run, then scraped the page and parsed the data out using RegEx. I probably could have been a bit more efficient with my parsing, as the main workflow got a liiiittle bit too long.
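For anyone curious what the batch macro is doing under the hood, here's a rough Python sketch of the same two steps: build one URL per letter/page combination, then pull cheese names out of each page with a regular expression. It's an illustration under assumptions, not the workflow I built. The URL template (`BASE_URL`), the query parameter names `i` and `page`, and the link pattern `CHEESE_LINK` are all hypothetical placeholders, so check them against the real site's HTML before relying on them.

```python
import re
from urllib.parse import urlencode

# Hypothetical URL template and parameter names: cheese.com's real ones may differ.
BASE_URL = "https://www.cheese.com/alphabetical/"

def build_urls(pages_per_letter):
    """Build one URL per (letter, page) pair, like the rows fed into a batch macro."""
    urls = []
    for letter, last_page in pages_per_letter.items():
        for page in range(1, last_page + 1):
            urls.append(f"{BASE_URL}?{urlencode({'i': letter, 'page': page})}")
    return urls

# Hypothetical markup pattern: a link whose href is a single path segment,
# e.g. <a href="/brie/">Brie</a>. Adjust to the HTML you actually download.
CHEESE_LINK = re.compile(r'<a href="(/[\w-]+/)">([^<]+)</a>')

def parse_cheeses(html):
    """Return (link, name) pairs for every cheese link matched in one page of HTML."""
    return [(href, name.strip()) for href, name in CHEESE_LINK.findall(html)]

if __name__ == "__main__":
    for url in build_urls({"a": 2, "b": 1}):
        print(url)
    print(parse_cheeses('<h3><a href="/brie/">Brie</a></h3>'))
```

Keeping URL building and parsing as separate functions mirrors the split between the control parameter (which page to fetch) and the RegEx parsing (what to pull out of it), which also makes each half easier to test on its own.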

I used a text input to list the letters and page numbers by hand, but a more dynamic approach would be to use an iterative macro to generate the links, perhaps even scraping the website first to find the maximum number of pages for each letter.
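Here's a rough Python sketch of both ideas, assuming things about the site I haven't verified. `max_page` reads the highest page number out of a letter's first page, assuming the pagination links carry it as `page=N` in their href. `scrape_until_empty` is the iterative-macro version: keep requesting the next page until one comes back with no cheeses. `fetch_page` is a hypothetical stand-in for "download and parse one page", and `cap` is a safety limit I've added so the loop can't run forever.

```python
import re
from typing import Callable, List

# Hypothetical: assumes pagination links look like href="?i=a&page=3".
PAGE_PARAM = re.compile(r"[?&]page=(\d+)")

def max_page(first_page_html: str) -> int:
    """Highest page number linked from a letter's first page (1 if there are no links)."""
    return max((int(n) for n in PAGE_PARAM.findall(first_page_html)), default=1)

def scrape_until_empty(
    letter: str,
    fetch_page: Callable[[str, int], List[str]],
    cap: int = 50,
) -> List[str]:
    """Request page 1, 2, 3, ... for one letter until a page returns no cheeses."""
    cheeses: List[str] = []
    for page in range(1, cap + 1):
        names = fetch_page(letter, page)
        if not names:  # an empty page means we've gone past the last one
            break
        cheeses.extend(names)
    return cheeses

if __name__ == "__main__":
    print(max_page('<a href="?i=a&page=2">2</a> <a href="?i=a&page=3">3</a>'))
    fake_site = {1: ["Abbaye de Belloc"], 2: ["Asiago"]}  # made-up test data
    print(scrape_until_empty("a", lambda letter, page: fake_site.get(page, [])))
```

The first approach costs one extra request per letter but tells you the full list of URLs up front; the second needs no knowledge of the pagination markup but always makes one "wasted" request to discover the end.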

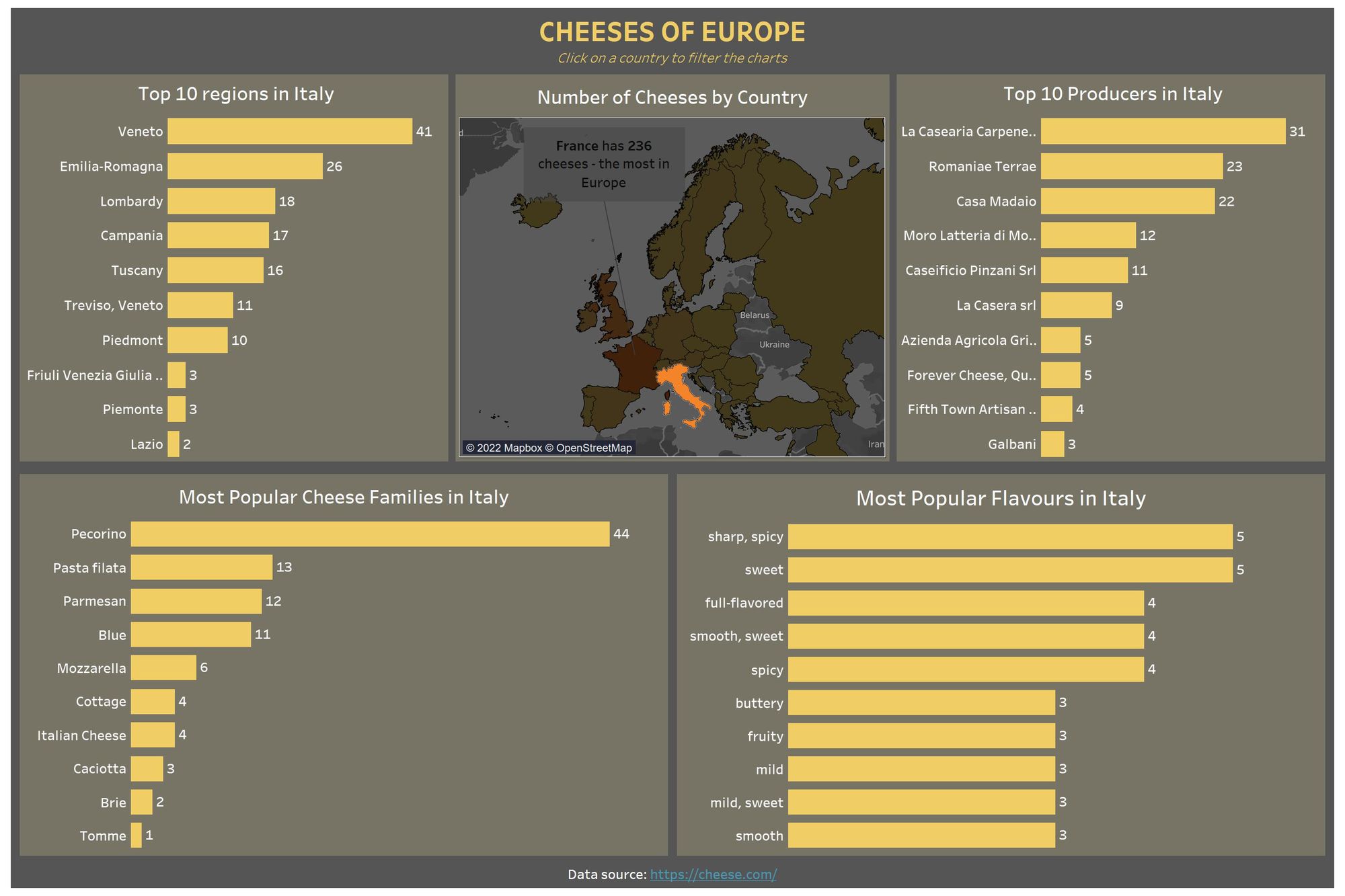

The dashboard I created with this data was pretty simple and exploratory: a cheese enthusiast could see which kinds of cheese each country produces the most, so they know what to try if they ever visit. Clicking on a country filters the rest of the dashboard, but it would be nice to add filters for other variables too.